Introduction

On January 6, 2025, at CES, NVIDIA officially unveiled NVIDIA® Project DIGITS, describing it as a personal AI supercomputer that gives AI researchers, data scientists, and students access to the power of the NVIDIA Grace Blackwell platform. The announcement caught my eye because "personal AI supercomputer" isn't a phrase you hear every day, and I was curious to see how they're packing that much compute into a workstation-friendly box.

About Petaflops

If you're new to the concept of petaflops, it's worth noting that supercomputer performance is measured in floating-point operations per second (FLOPS). To put that in terms of everyday computing, I found this article, which states:

Petaflops are a measure of a computer's processing speed equal to a thousand trillion FLOPS. That means a 1-petaflop system can handle a quadrillion (10¹⁵) floating-point operations every second, potentially about a million times more processing power than the fastest laptop.

That sounds like a lot, and getting that kind of power for $3,000 (see the pricing section below) feels like a great deal.
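To make the prefixes concrete, here is a minimal Python sketch of the unit arithmetic. The workload size of 10¹⁸ operations is a hypothetical round number chosen for illustration, and the timing assumes the machine sustains its peak rate, which real workloads rarely do. It also shows where the quoted "million times" ratio comes from: it is the gap between 10¹⁵ and 10⁹, i.e. it assumes a laptop sustaining roughly one gigaflop per second.

```python
# Unit arithmetic for FLOPS prefixes (decimal SI prefixes).
PREFIXES = {
    "gigaflops": 1e9,
    "teraflops": 1e12,
    "petaflops": 1e15,
    "exaflops": 1e18,
}


def seconds_for_workload(total_ops: float, flops: float) -> float:
    """Time to finish `total_ops` floating-point operations at a sustained rate of `flops`."""
    return total_ops / flops


if __name__ == "__main__":
    one_petaflop = PREFIXES["petaflops"]
    # 1 petaflop/s = 1,000 teraflop/s = 1,000,000 gigaflop/s
    print(one_petaflop / PREFIXES["teraflops"])  # 1000.0
    print(one_petaflop / PREFIXES["gigaflops"])  # 1000000.0
    # Hypothetical workload of 10^18 operations, assuming the peak rate is sustained:
    print(seconds_for_workload(1e18, one_petaflop))  # 1000.0 (seconds)
```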

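I found this list of top-of-the-line supercomputers with hundreds or even thousands of petaflops of performance, used by governments and security establishments.

There is also this list of systems under 10 petaflops, probably used by universities and research facilities.

But I doubt any of these can sit on a desk like Project DIGITS. One caveat: those rankings are based on 64-bit (FP64) benchmarks, while the Project DIGITS petaflop is quoted at FP4, so the numbers are not directly comparable.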

What is Project DIGITS?

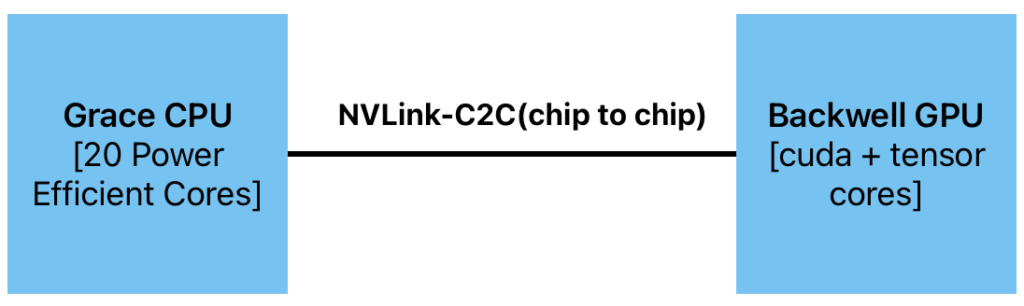

From what NVIDIA has shared, Project DIGITS is built around the GB10 Grace Blackwell Superchip, which couples a Grace CPU (featuring 20 power-efficient Arm cores) with a Blackwell GPU (equipped with CUDA cores and next-generation Tensor Cores). The two are connected via NVLink-C2C, a chip-to-chip interconnect designed to keep data moving between CPU and GPU without becoming a bottleneck.

In everyday terms, that makes it a powerful AI workstation you can plug into a standard office outlet, one that NVIDIA says can run models with up to 200 billion parameters. The 1 petaflop figure refers to what the system can achieve at FP4 precision, a 4-bit floating-point format used mainly to run quantized models efficiently (inference) rather than for full-precision training.
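A quick way to see why FP4 matters for the 200-billion-parameter claim is to estimate how much memory the weights alone take at different precisions. The sketch below is a rough estimate under simplifying assumptions: it ignores activations, the KV cache, optimizer state, and the small per-block scale factors that quantized formats usually carry. For reference, NVIDIA's announcement lists 128GB of unified memory, so only the FP4 case fits comfortably.

```python
# Rough weight-memory estimate for a model. Assumption: only the weights are
# counted (no activations, KV cache, optimizer state, or quantization scales).
BITS_PER_PARAM = {"FP32": 32, "FP16/BF16": 16, "FP8": 8, "FP4": 4}


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed to store the weights, in decimal gigabytes (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    params = 200e9  # the 200-billion-parameter figure quoted by NVIDIA
    for fmt, bits in BITS_PER_PARAM.items():
        print(f"{fmt:>10}: {weight_memory_gb(params, bits):,.0f} GB")
    # FP32: 800 GB, FP16/BF16: 400 GB, FP8: 200 GB, FP4: 100 GB
```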

Why Is It Interesting?

- Up to 1 Petaflop at FP4

As mentioned above, a petaflop is a measure of speed we typically associate with supercomputers. If Project DIGITS can genuinely deliver that on a single workstation, that's certainly impressive.

- Standard Office Power

NVIDIA states that the device runs from a standard wall socket, with no specialized power infrastructure, which makes it more feasible for smaller labs or home offices.

- Grace Blackwell Platform

Leveraging NVIDIA's broader hardware and software ecosystem should, at least in theory, streamline AI development. You can expect support for popular frameworks like PyTorch and TensorFlow, along with NVIDIA's own suite of data science tools.

Possible Use Cases

- AI Model Prototyping

Great for quickly iterating on new model architectures before scaling them up in a production environment.

- Large Language Model Training

With the claimed ability to handle models of up to 200B parameters, you could prototype, fine-tune, or run GPT-style and similarly large models locally.

- High-Performance Data Science

If your data pipeline involves large datasets, ample CPU and GPU horsepower can speed up everything from ETL to final analytics.

- Inference Workloads

For real-time services, such as chatbots, recommender systems, or computer vision tasks, a dedicated on-prem machine could reduce latency significantly.
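To get a feel for what a 1-petaflop peak means for these LLM use cases, here is a back-of-the-envelope sketch. It relies on common rules of thumb from the LLM scaling literature, not NVIDIA figures: roughly 2 × N FLOPs per generated token for inference and 6 × N FLOPs per token for training, where N is the parameter count. The 1-billion-token fine-tuning run is hypothetical. Treat both results as optimistic bounds: generation is usually limited by memory bandwidth rather than arithmetic, and training normally runs at higher precision than FP4 and needs far more memory than the weights alone.

```python
# Back-of-the-envelope compute estimates for dense transformer LLMs.
# Rules of thumb (assumptions): ~2*N FLOPs per token for inference,
# ~6*N FLOPs per token for training (forward + backward pass).
PEAK_FLOPS = 1e15  # 1 petaflop/s, NVIDIA's quoted peak at FP4
SECONDS_PER_DAY = 86_400


def inference_tokens_per_second_upper_bound(num_params: float, flops: float = PEAK_FLOPS) -> float:
    """Compute-bound ceiling on generation speed; real throughput is usually lower."""
    return flops / (2 * num_params)


def training_days_at_peak(num_params: float, num_tokens: float, flops: float = PEAK_FLOPS) -> float:
    """Days to process `num_tokens` training tokens at 100% of peak (never reached in practice)."""
    total_flops = 6 * num_params * num_tokens
    return total_flops / flops / SECONDS_PER_DAY


if __name__ == "__main__":
    n = 200e9  # 200B parameters
    print(f"{inference_tokens_per_second_upper_bound(n):,.0f} tokens/s ceiling")  # 2,500
    # Hypothetical fine-tuning run over 1 billion tokens:
    print(f"{training_days_at_peak(n, 1e9):.1f} days at peak")  # 13.9
```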

Availability and Pricing

NVIDIA expects Project DIGITS to be available in May 2025, with a starting price of around $3,000. It’s not cheap, but if you’re used to monthly cloud GPU bills for large-scale projects, you might find it cost-competitive over time — especially if you want on-prem control and consistent access to robust compute.
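Whether the box pays for itself depends on how much cloud compute it would replace. A minimal break-even sketch follows; the $2.00 hourly rate and 100 hours per month are hypothetical placeholders, not real cloud prices, and the calculation ignores electricity, depreciation, and any performance difference between the two options.

```python
def break_even_months(hardware_cost: float, monthly_cloud_cost: float) -> float:
    """Months of cloud spending that add up to the one-time hardware cost.

    Ignores electricity, depreciation, and performance differences.
    Returns infinity when there is no cloud spend to offset.
    """
    if monthly_cloud_cost <= 0:
        return float("inf")
    return hardware_cost / monthly_cloud_cost


if __name__ == "__main__":
    price = 3_000          # NVIDIA's quoted starting price, USD
    hourly_rate = 2.00     # hypothetical cloud GPU price per hour, USD
    hours_per_month = 100  # hypothetical monthly usage
    monthly = hourly_rate * hours_per_month
    print(f"Break-even after {break_even_months(price, monthly):.1f} months")  # 15.0
```

Plugging in your own hourly rate and usage is the point here; heavier monthly usage shortens the break-even period proportionally.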

Conclusion

It’s early days for Project DIGITS, and I’m looking forward to getting a closer look at real-world benchmarks and user feedback. If NVIDIA can deliver on its promise of making HPC-level performance more accessible — especially for AI researchers, data scientists, and students — then a personal AI supercomputer might not be as far-fetched as it sounds.

At the very least, it’s a reminder that petaflop-scale compute is inching closer to the workstation realm, offering a middle ground between the limitations of standard consumer GPUs and the complexity of remote HPC clusters. Only time will tell if this balance is enough to sway folks who rely on cloud solutions, but it’s a development worth keeping on your radar as AI workloads continue to grow.

Resources

NVIDIA announcement: https://nvidianews.nvidia.com/news/nvidia-puts-grace-blackwell-on-every-desk-and-at-every-ai-developers-fingertips

🌟 Stay Connected! 🌟

I love sharing ideas and stories here, but the conversation doesn’t have to end when the last paragraph does. Let’s keep it going!

🔹 Website: https://madhavarora.net

🔹 LinkedIn for professional insights and networking: https://www.linkedin.com/in/madhav-arora-0730a718/

🔹 Twitter for daily thoughts and interactions: https://twitter.com/MadhavAror

🔹 YouTube for engaging videos and deeper dives into topics: https://www.youtube.com/@aidiscoverylab

Got questions or want to say hello? Feel free to reach out to me at madhavarorabusiness@gmail.com. I’m always open to discussions, opportunities, or just a friendly chat. Let’s make the digital world a little more connected!